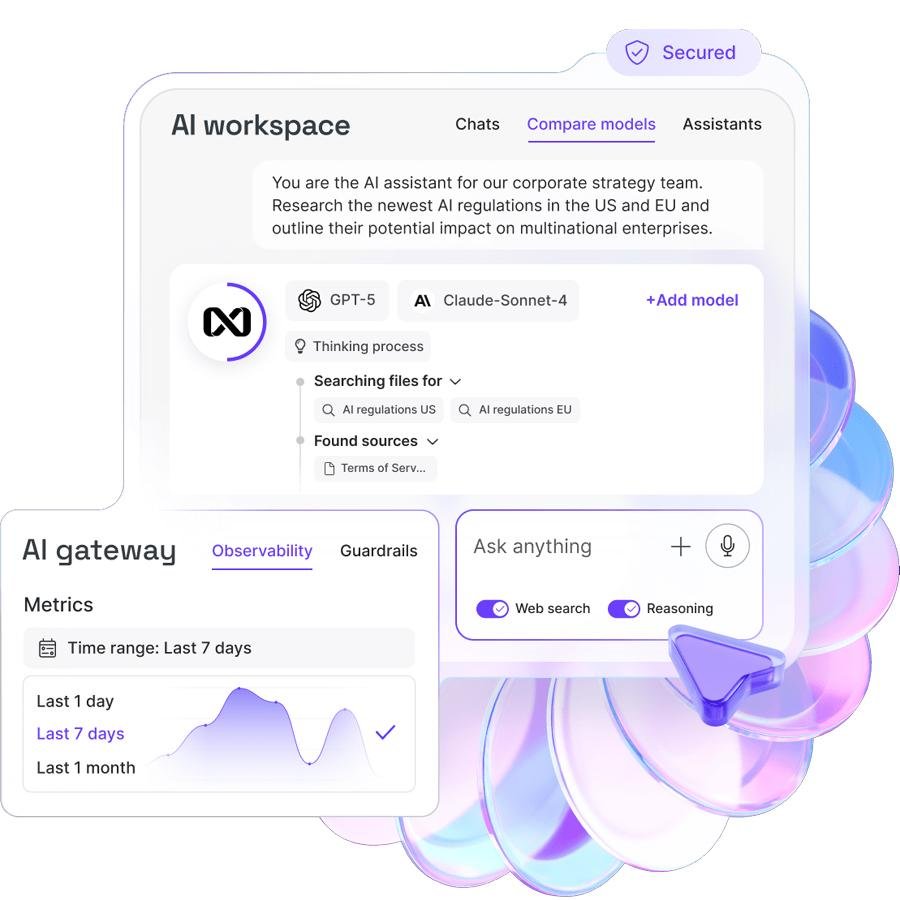

Teams want to use large language models, but juggling separate provider consoles, unmanaged subscriptions, and ad-hoc scripts leaves security gaps, hidden costs, and patchy results. That friction grows quickly: employees paste prompts into unofficial apps, engineering spends time rewiring integrations, and product owners can’t reliably measure model behavior. This nexos.ai review looks at how the platform positions itself as a single control layer (a workspace for knowledge workers and a gateway for developers) so organizations can let people use multiple LLMs from one place while keeping logs, policies, and fallbacks in view.

What is nexos.ai

nexos.ai is a unified AI platform that gives businesses access to many LLMs through one interface and one API. It combines an AI Workspace (for employees and teams) with an AI Gateway (for developers and production routing), wrapping model access in governance, observability, and enterprise controls. The vendor frames this as a way to let teams pick the best model for a task while keeping security and cost tracking centralized.

How the platform is packaged (AI Workspace vs AI Gateway)

AI Workspace — where people work with models

The AI Workspace is a browser-based hub where employees can:

Chat with and test multiple models (OpenAI, Anthropic, Google, Meta, etc.) from a single prompt window.

Compare outputs side-by-side to pick the best responses for the task.

Use documents, images, audio, and web search as inputs in the same context.

Create Assistants — reusable, role-specific bots with controlled prompts and defined behavior.

Store project artifacts and search organization data (Slack, Google Drive, Confluence) from one place.

Admins set available models, apply input/output filters, and manage budgets and teams. These controls help prevent accidental data exposure and keep usage visible.
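
To make the idea of an input filter concrete, here is a minimal sketch of a deny-list that redacts sensitive strings before a prompt leaves the organization. It is not nexos.ai's implementation or API; the `DENY_PATTERNS` rules and the `redact_prompt` function are hypothetical names chosen for illustration, and the two regular expressions are deliberately simple.

```python
import re

# Hypothetical deny-list rules an admin might configure (illustrative only).
DENY_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace deny-listed matches with a placeholder and report which rules fired."""
    fired = []
    for name, pattern in DENY_PATTERNS.items():
        prompt, count = pattern.subn(f"[REDACTED:{name}]", prompt)
        if count:
            fired.append(name)
    return prompt, fired


if __name__ == "__main__":
    cleaned, rules = redact_prompt("Contact jane@example.com about the outage")
    print(cleaned, rules)
```

Returning the list of rules that fired, and not only the cleaned prompt, is what keeps usage visible: the same call can feed both the model request and an admin report.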

AI Gateway — how engineers connect models to products

The AI Gateway is a single API endpoint that:

Routes requests to preferred LLMs and handles retries, fallbacks, and load balancing so apps keep working when a provider degrades.

Applies policies (timeouts, token budgets, deny-lists) at the edge instead of in every microservice.

Supports RAG workflows (retrieval-augmented generation) so models can answer from your documents.

Offers intelligent caching and private model hosting to reduce repeated calls and protect sensitive workloads.
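
The retry-and-fallback behavior described above can be sketched in a few lines. This is an illustration of the general technique under stated assumptions, not the Gateway's actual code: `ProviderError`, `call_with_fallback`, and the provider callables are hypothetical, and a real deployment would call provider SDKs where the stubs sit.

```python
import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by a provider call that failed (rate limit, timeout, outage)."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> tuple[str, str]:
    """Try each provider in order, retrying failures before falling through.

    Returns (provider_name, reply). Raises ProviderError if every provider fails.
    """
    errors = []
    for name, call in providers:
        for attempt in range(retries_per_provider):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                # Exponential backoff between attempts (zero by default).
                time.sleep(backoff_seconds * (2 ** attempt))
    raise ProviderError("; ".join(errors))


if __name__ == "__main__":
    def rate_limited(prompt: str) -> str:  # stub for a degraded provider
        raise ProviderError("429 rate limited")

    def healthy(prompt: str) -> str:  # stub for a working provider
        return f"reply to: {prompt}"

    print(call_with_fallback("hello", [("A", rate_limited), ("B", healthy)]))
```

Keeping this loop in one shared layer is the point of a gateway: each application sends a single request and does not need its own retry logic per provider.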

nexos.ai primary features

One interface for multiple LLMs (switch and compare in real time).

Access controls & AI guardrails at org, team, or model level.

Observability: centralized logs of prompts, responses, and costs.

Budget & token monitoring to manage spend.

Fallbacks, retries, and load balancing to keep production systems resilient.

Assistants & Projects for sharing repeatable workflows across teams.

Pricing and trial (what to expect)

nexos.ai pricing is quoted case by case and aimed at organizations; demos and custom plans are standard. The company promotes a free 14-day trial so teams can test premium features without a credit card, and enterprise quotes vary by usage, seat count, and private hosting needs. For a detailed quote, book a demo with sales. (This is typical for platform vendors that anchor pricing to consumption and compliance needs.)

Real-world mini case: customer support that must not fail

Situation: A SaaS support team runs a multi-model assistant to triage tickets in English and three other languages.

How nexos.ai helps:

AI Gateway routes high-confidence, low-latency requests to a faster open model for routine replies; complex legal or billing queries go to a higher-accuracy paid model.

Load balancing & fallbacks ensure that if Provider A rate-limits, Provider B takes over automatically so chatbots don’t go silent.

Observability tracks which model produced which reply, enabling audits and post-incident root cause analysis.

Result: Business continuity and a traceable audit trail for sensitive exchanges — with the same prompt history retained inside the Workspace.

This scenario shows how load balancing and observability work together: the former keeps service alive; the latter makes it explainable after the fact.
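
As a rough sketch of the routing and audit trail in this scenario, the snippet below picks a model per ticket and records which model produced each reply. Everything named here is an assumption for illustration: the model names, the `SENSITIVE_CATEGORIES` set, the 0.8 confidence threshold, and the `generate` callable that stands in for a real model call. It is not how nexos.ai implements routing.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical model names, categories, and threshold (illustrative only).
FAST_MODEL = "fast-open-model"
ACCURATE_MODEL = "high-accuracy-model"
SENSITIVE_CATEGORIES = {"legal", "billing"}
CONFIDENCE_THRESHOLD = 0.8


def choose_model(category: str, confidence: float) -> str:
    """Send sensitive or low-confidence tickets to the more accurate model."""
    if category in SENSITIVE_CATEGORIES or confidence < CONFIDENCE_THRESHOLD:
        return ACCURATE_MODEL
    return FAST_MODEL


@dataclass
class AuditLog:
    """In-memory record of which model answered which ticket."""
    records: list = field(default_factory=list)

    def record(self, ticket_id: str, model: str, reply: str) -> None:
        self.records.append({"ticket_id": ticket_id, "model": model, "reply": reply})

    def model_for(self, ticket_id: str) -> Optional[str]:
        """Return the model behind the latest reply for a ticket, if any."""
        for entry in reversed(self.records):
            if entry["ticket_id"] == ticket_id:
                return entry["model"]
        return None


def handle_ticket(
    ticket_id: str,
    category: str,
    confidence: float,
    text: str,
    generate: Callable[[str, str], str],
    log: AuditLog,
) -> str:
    """Route the ticket, generate a reply, and log the model that produced it."""
    model = choose_model(category, confidence)
    reply = generate(model, text)
    log.record(ticket_id, model, reply)
    return reply
```

During a post-incident review, `log.model_for("T2")` answers the question the audit needs answered: which model wrote the reply the customer saw.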

What I think many product teams overlook

Most articles explain that orchestration saves time; fewer explain the operational leverage that comes from shifting certain responsibilities off platform teams and into a central control plane:

Shorter feedback loops for model selection. Non-engineers can test models in Workspace and share Assistants; engineering only needs to connect to the Gateway once. That reduces the number of one-off integrations and speeds rollout of new model versions.

Policy as a first-class citizen. When guardrails live in the Gateway and Workspace, legal, security, and product teams can enforce constraints centrally instead of requesting code changes in every application that calls a model.

Cost accountability without slowing innovation. Per-team budget limits and token visibility allow teams to experiment while still capping runaway bills.

These are operational benefits that matter more as you scale from a handful of pilots to organization-wide AI adoption — and they’re not just product features on a spec sheet; they change who owns what in your org chart.
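
A per-team budget cap of the kind described above could look like the following minimal sketch. The `TeamBudget` class, the `BudgetExceeded` exception, and the token limits are hypothetical; the review does not describe how nexos.ai stores or enforces budgets internally.

```python
class BudgetExceeded(Exception):
    """Raised when a request would push a team past its token limit."""


class TeamBudget:
    """Track token usage per team against a fixed limit (illustrative only)."""

    def __init__(self, limits: dict[str, int]):
        self.limits = dict(limits)
        self.used = {team: 0 for team in limits}

    def charge(self, team: str, tokens: int) -> int:
        """Record usage and return the tokens remaining for the team.

        Raises KeyError for an unknown team and BudgetExceeded when the
        request would exceed the limit; usage is unchanged in both cases.
        """
        limit = self.limits[team]
        if self.used[team] + tokens > limit:
            raise BudgetExceeded(f"{team} would exceed its {limit}-token limit")
        self.used[team] += tokens
        return limit - self.used[team]


if __name__ == "__main__":
    budget = TeamBudget({"support": 1000, "marketing": 500})
    print(budget.charge("support", 600))
```

Checking the limit before recording usage means a rejected request costs nothing, so a team that hits its cap can keep making smaller requests until the budget is reset.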

Notes on accuracy and trust

Output accuracy still depends on the underlying model chosen for a task. nexos.ai provides tools to compare outputs and mark trusted models, but it does not change the fundamental limits of the LLMs it routes to. Use the Workspace comparison feature and human review when building high-stakes assistants.

Observability is strong where the platform logs prompts and responses; however, organizations should verify retention windows, exportability of logs, and whether logs contain sensitive payloads. Those details determine whether records meet audit or legal retention policies.

Strengths and limits

Strengths

Central governance and per-team budgets.

Built-in redundancy (fallbacks, retries, load balancing).

Developer-friendly API with RAG and caching support.

Workspace that reduces shadow AI by giving employees a safe place to experiment.

Limits

Pricing is bespoke; you’ll need to validate total cost vs direct provider spending.

Accuracy remains model-dependent — platform control does not replace careful evaluation of outputs for regulated tasks.

Any third-party control plane adds an operational dependency; confirm SLAs and incident practices with vendor support.

Key Takeaways from nexos.ai Review

nexos.ai brings an integrated AI Workspace and AI Gateway so teams can use many LLMs with shared governance.

Observability and guardrails are designed to reduce data-leak risk and make AI use auditable.

Load balancing, fallbacks, and caching help keep production systems stable during provider outages.

Pricing is usage-driven and often custom — trial the platform and run a proof-of-value before committing.

Operational payoff comes from shifting policy and routing decisions to the control plane, shortening developer cycles.

FAQs

Q: What is nexos.ai used for?

A: It’s used to give teams a single place to access, compare, and govern multiple LLMs (AI Workspace) and to provide a single API for production routing and controls (AI Gateway).

Q: Does nexos.ai host private models?

A: Yes — the platform supports private model hosting options for sensitive workloads, allowing isolation from public providers. Confirm deployment options with sales for detailed network and hosting requirements.

Q: How does nexos.ai help with costs?

A: It provides token and budget monitoring, caching, and routing rules, so you can serve routine queries from a cache or a cheaper model and reserve high-accuracy models for critical tasks.

Q: Is there a free trial?

A: The company offers a 14-day free trial to explore premium features and the admin controls without entering payment details.

Conclusion

If your organization uses more than one LLM provider, has compliance or observability needs, or wants an easier way for non-engineers to test models safely, nexos.ai is worth a proof-of-concept. Start with the 14-day trial (no credit card) and validate two things in your pilot: (1) whether observability meets audit needs, and (2) whether Gateway routing reduces operational toil compared with your current integrations. If those land, the platform can pay back quickly in less time spent on integration work and fewer security blind spots.

Try the free trial, run a small RAG or support-bot pilot, and measure whether central routing + guardrails reduce incidents and hidden spend.
