
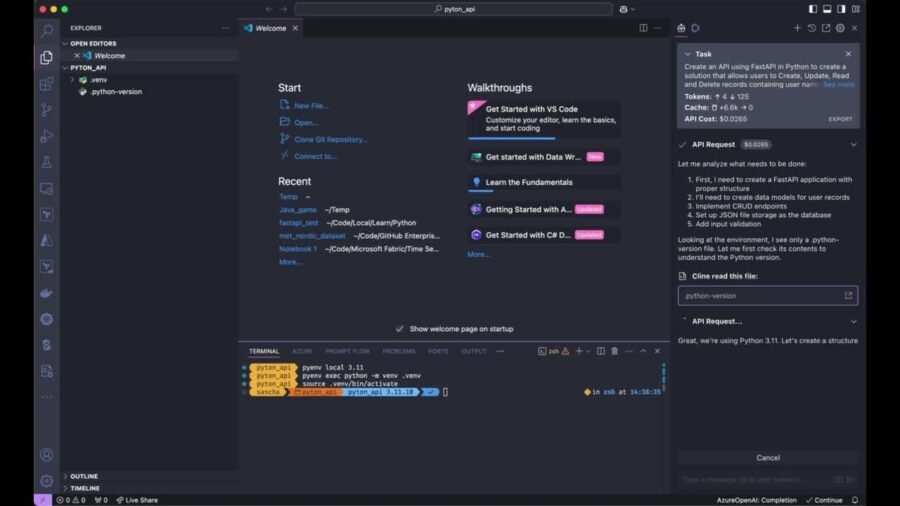

You want Cursor-style AI coding help (multi-file edits, code generation, and chat), but you don’t want to pay $20/month or send your code to someone else. That tension gets worse when your hobby project or client code contains sensitive data, or when you just want full control over models and costs. The good news: you can get comparable features inside Visual Studio Code by combining two free, open-source extensions (Cline and Continue.dev) and running models locally with Ollama, or falling back to OpenRouter’s API. This guide walks you through the setup, how to configure models, how to switch to OpenRouter when credits run out, and why this stack is a privacy-first Cursor alternative.


Why this stack? Quick overview

VS Code is free, widely supported, and extensible.

Continue.dev brings chat, autocomplete, and edit workflows to the IDE — and you can point it at any model or local server.

Cline (cline.bot; cline on GitHub) acts as an autonomous coding agent inside VS Code: multi-file edits, file creation, and running safe commands. It supports provider API keys (OpenRouter, Anthropic, OpenAI, etc.).

Ollama runs models locally so your code never leaves your machine; you pull models with the CLI and serve them to Continue/Cline.

OpenRouter is a handy API fallback when you exhaust free credits or when you need a hosted model.

Put together, these give you: Cursor features, local/private models, and cost control.

What you’ll build by the end

A working VS Code environment where:

Continue.dev provides inline autocomplete, chat, and edit workflows.

Cline acts as the multi-file agent that can create/edit files and run safe commands.

Ollama serves local models (or OpenRouter serves remote models) used by Continue/Cline.


Set Up VS Code with Cline and Continue.dev Step-by-step

1. Install Visual Studio Code

Go to the official site, download the installer for your OS, and install it. Use the stable build unless you want bleeding-edge features from Insiders. (If you already use VS Code, skip to step 2.)

Tip: use the Extensions view (Ctrl+Shift+X) to find and manage extensions.

2. Install Ollama (local model host)

Ollama is the simplest way to run LLMs on your machine (it provides both CLI and a desktop app). Install from Ollama’s site, follow the platform instructions, then verify with ollama --help or ollama list. Once installed you can pull or run models locally using commands like ollama pull <model> or ollama run <model>.

Quick CLI examples:

Here, deepseek-r1 and qwen2.5-coder are the example models from the video. Run ollama list to see which models are already installed, or check Ollama’s library for the exact model IDs. Use ollama pull <model-id> to download a model before pointing Continue at it.
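To check from a script which models are already installed, you can query Ollama's local HTTP API instead of reading ollama list by eye. The sketch below assumes Ollama is running at its default address (localhost:11434) and that the /api/tags endpoint returns a "models" list with a "name" per entry. The helper names are illustrative, not part of any Ollama client.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def installed_model_names(tags_payload: dict) -> list[str]:
    """Extract model names (e.g. "deepseek-r1:1.5b") from an /api/tags response body."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def missing_models(wanted: list[str], installed: list[str]) -> list[str]:
    """Return the wanted models that have no installed match.

    A wanted name without a tag (e.g. "qwen2.5-coder") matches any installed tag of it;
    a wanted name with a tag must match exactly.
    """
    missing = []
    for name in wanted:
        if ":" in name:
            found = name in installed
        else:
            found = any(item.split(":", 1)[0] == name for item in installed)
        if not found:
            missing.append(name)
    return missing


def fetch_tags(url: str = OLLAMA_TAGS_URL) -> dict:
    """Ask the local Ollama server which models it has (raises if the server is down)."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)
```

A typical use would be missing_models(["deepseek-r1", "qwen2.5-coder"], installed_model_names(fetch_tags())), followed by ollama pull for each name it returns.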

Test: open a small sample.py, start typing, and accept autocomplete suggestions with Tab. Use Continue’s chat to ask it to scaffold a file — it will call the model you configured.
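The model Continue calls is whatever its configuration file names. As a rough sketch, the snippet below builds a configuration in the JSON style that Continue has used (a "models" list plus a "tabAutocompleteModel" entry, each with provider set to "ollama"). Treat the field names as an assumption to verify against the Continue docs for your version, since newer releases may use a YAML file with a different layout. The model tags passed in are placeholders.

```python
import json


def continue_config(chat_model: str, autocomplete_model: str) -> dict:
    """Build a Continue-style config that routes chat and autocomplete to local Ollama models.

    Assumption: field names follow Continue's JSON config layout; check your version's docs.
    """
    return {
        "models": [
            {
                "title": f"Ollama {chat_model}",
                "provider": "ollama",
                "model": chat_model,
            }
        ],
        "tabAutocompleteModel": {
            "title": f"Ollama {autocomplete_model}",
            "provider": "ollama",
            "model": autocomplete_model,
        },
    }


def render(config: dict) -> str:
    """Serialize the config as indented JSON, ready to paste into the config file."""
    return json.dumps(config, indent=2)
```

Using a larger model for chat and a small coder model for autocomplete keeps suggestions fast while leaving reasoning to the bigger model.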

5. Configure Cline (sign in + provider)

Cline typically asks you to sign in (app.cline.bot) or supply a provider API key. For local usage you can point Cline at Ollama, if your Cline version offers a local provider option, or set Cline’s provider to OpenRouter and paste your OpenRouter API key (see next step). New accounts may receive trial credits. Once configured, Cline shows a sidebar UI for prompts, multi-file operations, and permissions for running commands.


6. When credits run out: switch Cline to OpenRouter

If your Cline trial credits finish or you prefer hosted free models, create an OpenRouter account, create an API key, and paste the key in Cline’s provider configuration. OpenRouter gives a single API to many hosted models (free and paid). You can pick a larger hosted model (e.g., DeepSeek R1 equivalent) for heavier tasks — trade-off: latency and occasional queue/timeouts.
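Before pasting a new key into Cline, it can help to confirm the key works on its own. OpenRouter exposes an OpenAI-compatible chat completions endpoint, so a minimal sketch using only the standard library looks like the following. The model ID you pass is up to you (check OpenRouter's model list for current IDs), and the generous timeout reflects the latency trade-off mentioned above.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for OpenRouter."""
    body = {
        "model": model,  # a model ID taken from OpenRouter's model list
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 60.0) -> str:
    """Send the request and return the first reply's text; hosted models can be slow."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Read the key from an environment variable rather than hard-coding it, then call send(build_request(key, model_id, "Say hello")) once to confirm the account is active.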

Mini case study — Build a retro Flappy Bird

In the demo video, the author gave Cline a single prompt to create a Flappy Bird game (HTML/CSS/JS). Cline generated the project files (index.html, styles.css, and game.js) and even ran a basic check. Continue handled inline autocomplete and small edits. Outcome: a playable prototype from one prompt with minimal manual file editing, and no code left the local machine when Ollama was the model provider.

Lessons:

Auto-approve lets agents run safe commands automatically — use cautiously.

For heavy refactors or large codebases, prefer local models or rate-limit hosted models to avoid timeouts.

Always review generated code; AI is fast but not perfect.
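To make "safe commands" concrete, here is one way to think about auto-approval: an explicit allowlist, with everything else requiring a human click. This is a hypothetical illustration of the idea, not how Cline implements its permissions. The SAFE_COMMANDS table and the function name are made up for this sketch.

```python
import shlex

# Hypothetical allowlist: program name -> allowed first argument (None means any arguments).
SAFE_COMMANDS = {
    "ls": None,
    "git": {"status", "diff", "log"},
    "npm": {"test"},
}

# Characters that chain, redirect, or substitute commands; refuse them outright.
SHELL_METACHARACTERS = ";|&><`$"


def is_auto_approvable(command_line: str) -> bool:
    """Return True only for commands that match the allowlist exactly."""
    if any(ch in command_line for ch in SHELL_METACHARACTERS):
        return False
    try:
        parts = shlex.split(command_line)
    except ValueError:
        # Unbalanced quotes or similar: treat as unsafe.
        return False
    if not parts or parts[0] not in SAFE_COMMANDS:
        return False
    allowed_subcommands = SAFE_COMMANDS[parts[0]]
    if allowed_subcommands is None:
        return True
    return len(parts) > 1 and parts[1] in allowed_subcommands
```

Under this policy, git status passes while git push and rm -rf do not. The default is denial, so anything new waits for review.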

Troubleshooting & best practices

Models fail to download / out of disk? Remove unused models with ollama rm <model> or move Ollama’s model directory to a larger drive.

High latency on OpenRouter free models? Try smaller hosted models or run a local Ollama model. Expect slowdowns at peak times.

Cline asks for an API key but you want local only? Check Cline docs — it can use local providers or empty keys if the plugin supports direct Ollama endpoints. If not, use Continue for local work and Cline for provider-backed agent tasks.

Security: do not paste production secrets into prompts. Use .env files and secret placeholders when possible.
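For the security point above, one practical habit is to redact values before any .env-style content goes into a prompt. The sketch below keeps the variable names, which the model usually needs, and replaces the values with a placeholder. It assumes simple KEY=value lines. The function name and the placeholder text are illustrative.

```python
import re

# Matches simple KEY=value lines (optionally prefixed with "export"), one per line.
# Group 1 is everything up to the value; group 2 is the value itself.
ENV_LINE = re.compile(
    r"^([ \t]*(?:export[ \t]+)?[A-Za-z_][A-Za-z0-9_]*[ \t]*=[ \t]*)(\S.*)$",
    re.MULTILINE,
)


def redact_env(text: str, placeholder: str = "<REDACTED>") -> str:
    """Replace every non-empty value in .env-style text with a placeholder."""
    return ENV_LINE.sub(lambda match: match.group(1) + placeholder, text)
```

Comment lines and empty assignments pass through unchanged, so the redacted text still shows the model which settings exist.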

Key Takeaways

You can replicate Cursor’s best features in VS Code by combining Continue.dev (chat/autocomplete), Cline (agent & multi-file edits) and Ollama (local models).

Local models = privacy + cost control. Ollama lets you download and run models on your machine; use ollama pull and ollama run.

OpenRouter is a convenient backup when local models aren’t possible or when you exhaust trial credits.

Auto-approve and autonomous agents are powerful but risky. Use them with consent and code reviews.

Pick model size wisely (smaller models often deliver practical autocomplete and are cheaper/faster).


FAQs (People Also Ask)

Q: Do I need a GPU to run Ollama models?

A: No — many models run on CPU, but larger models (13B+) perform much better with GPUs or at least a modern CPU and lots of RAM. For casual use pick 1.5–7B models.
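A back-of-the-envelope calculation helps when picking a size. Memory needed is roughly parameter count times bytes per weight, plus some headroom for context and runtime buffers. The sketch below assumes 4-bit quantized weights and a 20% overhead factor. Both numbers are assumptions for illustration, and real usage varies by model and context length.

```python
def estimate_model_memory_gb(
    params_billion: float,
    bits_per_weight: int = 4,   # assumption: 4-bit quantized weights
    overhead: float = 1.2,      # assumption: ~20% extra for context and buffers
) -> float:
    """Rough memory estimate in GB (decimal) for loading a model of the given size."""
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def fits_in_memory(params_billion: float, available_gb: float) -> bool:
    """True if the default estimate for this model size fits in the given memory."""
    return estimate_model_memory_gb(params_billion) <= available_gb
```

With these defaults, a 7B model comes out around 4.2 GB and a 13B model around 7.8 GB, which is why the 1.5–7B range is comfortable on typical laptops.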

Q: Which models should I pull for coding tasks?

A: Start with smaller, code-tuned models (the Ollama library shows code-specialized models). The demo used a small “coder” model for autocomplete and a chat model for reasoning. Use ollama list and ollama show <model> to inspect.

Q: Can Cline or Continue access my remote repos?

A: They operate inside VS Code and can read the files you open. They do not automatically send code to third parties if you use local models — but if you use OpenRouter or other hosted providers, code/context is sent to that provider.

Q: Are there privacy guarantees?

A: Local Ollama models keep data on your machine. Hosted providers (OpenRouter, Anthropic, OpenAI) depend on their policies — check the provider’s privacy docs and your own internal policies before sending sensitive code.
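A quick sanity check for the local-versus-hosted distinction is to look at where a configured endpoint actually points. This sketch treats a URL as local only if its host is localhost or a loopback IP address. The function name is illustrative, and the check says nothing about what a local server itself does with the data.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (e.g. a public domain name): treat as remote.
        return False
```

Ollama's default address (http://localhost:11434) passes, while a hosted API base URL does not, which is a reminder that prompts and code context leave the machine in that case.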

Conclusion

If you want Cursor-like power without the subscription, set up VS Code with Continue.dev and Cline and choose Ollama for local models (or OpenRouter as a fallback). This stack is privacy-first, flexible, and—once set up—fast to iterate with. Try it on a hobby project first: install VS Code, pull a small Ollama model, install Continue and Cline, and ask the agent to scaffold a tiny project. You’ll be surprised how much you can do for free while keeping your data local.

Official resources

VS Code — download and docs

Ollama — run models locally and docs/library

Continue.dev docs & extension — VS Code integration and agent features

OpenRouter — unified API for hosted models (use as fallback)
